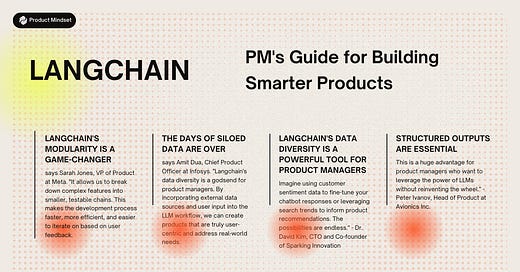

LangChain: PM's Guide for Building Smarter Products

✨ Introducing our new newsletter series: LangChain for Product Managers

LangChain

LangChain is a framework for developing applications powered by language models. It enables applications that:

Are context-aware: connect a language model to sources of context (prompt instructions, few-shot examples, content to ground its response in, etc.)

Reason: rely on a language model to reason (about how to answer based on provided context, what actions to take, etc.)

At its core, LangChain is a framework built around LLMs. We can use it for chatbots, Generative Question-Answering (GQA), summarization, and much more.

The core idea of the library is that we can “chain” together different components to create more advanced use cases around LLMs. Chains may consist of multiple components from several modules:

Prompt templates: Reusable templates for different types of prompts, such as “chatbot”-style templates or ELI5 (“explain like I'm five”) question-answering.

LLMs: Large language models such as GPT-3 and BLOOM.

Agents: Agents use LLMs to decide what actions should be taken. Tools like web search or calculators can be used, and all are packaged into a logical loop of operations.

Memory: Short-term memory (for example, the current conversation) and long-term memory (information kept across sessions).
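
To make the “chaining” idea concrete, here is a minimal sketch in plain Python. It does not use LangChain's actual API: `SimplePromptTemplate`, `SimpleChatChain`, and `fake_llm` are hypothetical names invented for illustration, and the model call is a stub that returns a canned string. It shows a prompt template, an LLM, and short-term memory wired into one chain.

```python
from dataclasses import dataclass, field
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned reply."""
    return f"[model reply to a prompt of {len(prompt)} characters]"


@dataclass
class SimplePromptTemplate:
    """Fills named placeholders in a template string (illustrative only)."""
    template: str

    def format(self, **values: str) -> str:
        return self.template.format(**values)


@dataclass
class SimpleChatChain:
    """Chains template -> LLM and keeps a short-term memory of past turns."""
    prompt: SimplePromptTemplate
    llm: Callable[[str], str]
    history: List[str] = field(default_factory=list)

    def run(self, question: str) -> str:
        # Build the prompt from the stored history plus the new question.
        text = self.prompt.format(
            history="\n".join(self.history), question=question
        )
        answer = self.llm(text)
        # Remember both sides of the turn so the next prompt includes them.
        self.history.append(f"User: {question}")
        self.history.append(f"AI: {answer}")
        return answer


chain = SimpleChatChain(
    prompt=SimplePromptTemplate(
        "Explain like I'm five.\n{history}\nUser: {question}\nAI:"
    ),
    llm=fake_llm,
)
first = chain.run("What is a language model?")
second = chain.run("Why does it need context?")
```

Because the second call includes the first exchange in its prompt, the stubbed model sees a longer prompt the second time. That is the practical effect of memory in a chain.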

Core concepts of LangChain

LangChain's architecture is built on two concepts: components and chains. Components are reusable modules that perform specific tasks, such as processing input data, generating text, accessing external information, or managing workflows. Chains are sequences of components that work together toward a broader goal, such as summarizing a document, generating creative text, or providing personalized recommendations.

Components and modules

In LangChain, the terms "components" and "modules" are sometimes used interchangeably, but there is a subtle distinction between the two:

Components are the core building blocks of LangChain, representing specific tasks or functionalities. These are typically small and focused and can be reused across different applications and workflows.

Modules, on the other hand, combine multiple components to form more complex functionalities. LangChain even provides standard interfaces for a few of its main modules, including memory modules (reusable building blocks that store and manage data for use by large language models) and agents (dynamic control units that orchestrate chains based on real-time feedback and user interaction).

Like components, modules are reusable and can be combined to create even more complex workflows. The result is a chain: a sequence of components or modules put together to achieve a specific goal. Chains are fundamental to workflow orchestration in LangChain and are essential for building effective applications that handle a wide range of tasks.
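
The relationship between components, modules, and chains can be sketched with ordinary functions. This is an illustration of the composition idea only, not LangChain code: `make_chain`, `clean`, `truncate`, and `to_prompt` are hypothetical helpers, and the 40-character limit is an arbitrary assumption.

```python
from functools import reduce
from typing import Any, Callable

Component = Callable[[Any], Any]


def make_chain(*components: Component) -> Component:
    """Return a function that runs the components left to right,
    passing each output to the next component."""
    def run(value: Any) -> Any:
        return reduce(lambda acc, step: step(acc), components, value)
    return run


def clean(text: str) -> str:
    """Component: collapse runs of whitespace into single spaces."""
    return " ".join(text.split())


def truncate(text: str) -> str:
    """Component: keep the first 40 characters (arbitrary limit)."""
    return text[:40]


def to_prompt(text: str) -> str:
    """Component: wrap the text in a summarization instruction."""
    return f"Summarize: {text}"


# A "module": two small components combined into a reusable unit.
preprocess = make_chain(clean, truncate)

# A chain that reuses the module and adds one more component.
summarize_prompt = make_chain(preprocess, to_prompt)

result = summarize_prompt("  LangChain   chains\ncomponents together.  ")
```

The same `preprocess` module could be dropped into a different chain unchanged, which is the reuse property described above.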

Integration with LLMs

LangChain integrates with LLMs through a standardized interface, but the integration goes beyond a simple connection mechanism. It also offers several features that optimize the use of LLMs for building language-based applications:

Prompt management: LangChain enables you to craft effective prompts that help the LLMs understand the task and generate a useful response.

Dynamic LLM selection: This allows you to select the most appropriate LLM for different tasks based on factors like complexity, accuracy requirements, and computational resources.

Memory management integration: LangChain integrates with memory modules, which means LLMs can access and process external information.

Agent-based management: This enables you to orchestrate complex LLM-based workflows that adapt to changing circumstances and user needs.
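
As a rough illustration of a standardized interface combined with dynamic LLM selection, the sketch below defines a shared `generate` method and a selection rule. Everything here is assumed for the example: the class names, the model names, and the 50-word threshold are hypothetical, and the "models" return fixed strings rather than calling a real service.

```python
from typing import Protocol


class LLM(Protocol):
    """The shared interface: any model exposes a name and generate()."""
    name: str

    def generate(self, prompt: str) -> str: ...


class SmallModel:
    """Hypothetical cheap, fast model."""
    name = "small-fast"

    def generate(self, prompt: str) -> str:
        return f"{self.name}: short answer"


class LargeModel:
    """Hypothetical slower, more accurate model."""
    name = "large-accurate"

    def generate(self, prompt: str) -> str:
        return f"{self.name}: detailed answer"


def select_llm(prompt: str, needs_high_accuracy: bool) -> LLM:
    """Pick a model by task demands (the 50-word threshold is arbitrary)."""
    if needs_high_accuracy or len(prompt.split()) > 50:
        return LargeModel()
    return SmallModel()


# The calling code is the same whichever model is chosen.
quick = select_llm("Say hello", needs_high_accuracy=False)
careful = select_llm("Review this contract clause", needs_high_accuracy=True)
quick_reply = quick.generate("Say hello")
careful_reply = careful.generate("Review this contract clause")
```

The design point is that the application calls `generate` without caring which model sits behind it, so the selection rule can change without touching the rest of the workflow.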

Workflow management

In LangChain, workflow management is the process of orchestrating and controlling the execution of chains and agents to solve a specific problem. This involves managing the flow of data, coordinating the execution of components, and ensuring that applications respond effectively to user interactions and changing circumstances. Here are some of the key workflow management components:

Chain orchestration: LangChain coordinates the execution of chains to ensure tasks are performed in the correct order and data is correctly passed between components.

Agent-based management: The use of agents is simplified with predefined templates and a user-friendly interface.

State management: LangChain automatically tracks the state of the application, providing developers with a unified interface for accessing and modifying state information.

Concurrency management: LangChain handles the complexities of concurrent execution, enabling developers to focus on the tasks and interactions without worrying about threading or synchronization issues.
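
The agent and state-management ideas above can be sketched as a small loop. This is a simplified illustration, not LangChain's agent implementation: the `decide` function is a hand-written rule standing in for the LLM's decision step, and the tools and names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple


def calculator(expression: str) -> str:
    """Tool: add the integers in an 'a+b+c' style expression."""
    return str(sum(int(part) for part in expression.split("+")))


def word_count(text: str) -> str:
    """Tool: count whitespace-separated words."""
    return str(len(text.split()))


TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "word_count": word_count,
}

# State is the list of (tool name, tool result) pairs produced so far.
State = List[Tuple[str, str]]


def decide(task: str, state: State) -> Tuple[str, str]:
    """Hypothetical stand-in for the LLM choosing the next action."""
    if state:
        # A tool has already produced a result, so finish with it.
        return ("finish", state[-1][1])
    if any(ch.isdigit() for ch in task):
        return ("calculator", task)
    return ("word_count", task)


def run_agent(task: str, max_steps: int = 3) -> str:
    """Loop: decide, act, record state, until 'finish' or the step limit."""
    state: State = []
    for _ in range(max_steps):
        action, argument = decide(task, state)
        if action == "finish":
            return argument
        state.append((action, TOOLS[action](argument)))
    return state[-1][1] if state else ""


total = run_agent("2+3+4")
count = run_agent("how many words here")
```

The step limit matters in practice: an agent that never decides to finish would otherwise loop indefinitely.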

LangChain use cases

Applications made with LangChain provide great utility for a variety of use cases, from straightforward question-answering and text generation tasks to more complex solutions that use an LLM as a “reasoning engine.”

Chatbots: Chatbots are among the most intuitive uses of LLMs. LangChain can be used to provide proper context for the specific use of a chatbot, and to integrate chatbots into existing communication channels and workflows with their own APIs.

Summarization: Language models can be tasked with summarizing many types of text, from breaking down complex academic articles and transcripts to providing a digest of incoming emails.

Question answering: Using specific documents or specialized knowledge bases (like Wolfram, arXiv, or PubMed), LLMs can retrieve relevant information from storage and articulate helpful answers. If fine-tuned or properly prompted, some LLMs can answer many questions even without external information.

Data augmentation: LLMs can be used to generate synthetic data for use in machine learning. For example, an LLM can be trained to generate additional data samples that closely resemble the data points in a training dataset.

Virtual agents: Integrated with the right workflows, LangChain’s Agent modules can use an LLM to autonomously determine next steps and take action using robotic process automation (RPA).
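
For the question-answering use case, the retrieve-then-prompt pattern can be sketched as follows. This is a toy illustration under stated assumptions: the three documents are made up, `retrieve` uses simple word overlap rather than a real search index or embeddings, and the resulting prompt would still need to be sent to an actual model.

```python
from typing import List

# Hypothetical knowledge base for the example.
DOCUMENTS = [
    "LangChain chains components such as prompt templates and LLMs.",
    "Agents use an LLM to decide which tool to call next.",
    "Memory modules store conversation history for later turns.",
]


def words(text: str) -> set:
    """Lowercase the text, drop basic punctuation, and split into words."""
    return set(text.lower().replace("?", "").replace(".", "").split())


def retrieve(question: str, documents: List[str]) -> str:
    """Return the document sharing the most words with the question."""
    question_words = words(question)
    return max(documents, key=lambda doc: len(question_words & words(doc)))


def build_prompt(question: str, context: str) -> str:
    """Ground the model's answer in the retrieved context."""
    return (
        f"Answer using only this context:\n{context}\n\n"
        f"Question: {question}"
    )


question = "How do agents decide which tool to call?"
context = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, context)
```

Supplying the retrieved passage in the prompt is what lets the model answer from the knowledge base rather than from its training data alone.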