Explainable AI: Black Box of Artificial Intelligence

Explainable AI (XAI) is used to describe an AI model, its expected impact, and its potential biases.

What is explainable AI?

Explainable Artificial Intelligence (XAI), through its framework and set of tools, helps product managers and organizations add a layer of transparency to a specific AI model, so that users can understand the logic behind its predictions. XAI gives users insight into how a complex machine learning algorithm works and what logic drives the model's decisions.

As AI becomes more advanced, humans find it increasingly difficult to comprehend and retrace how an algorithm arrived at a result. The whole calculation process becomes what is commonly called a “black box” that is very hard, if not impossible, to interpret. Black-box models are created directly from the data, and often not even the engineers or data scientists who build the algorithm can explain exactly what is happening inside them or how it arrived at a specific result.

There are many advantages to understanding how an AI-enabled system arrived at a specific output. Explainability can help developers ensure that the system is working as expected; it may be necessary to meet regulatory standards; and it can allow those affected by a decision to challenge or change that outcome.

Why explainable AI matters

AI can automate decisions, and those decisions have business impacts, both positive and negative, at both large and small scales. Although AI is growing and becoming more widely used, a fundamental problem remains: people often do not understand why an AI system makes a particular decision, and sometimes neither do the developers who built it.

AI algorithms are sometimes like black boxes; that is, they take inputs and provide outputs with no way to understand their inner workings. Developers and users ask themselves questions such as: How do these models reach certain conclusions? What data do they use? Are the results reliable?

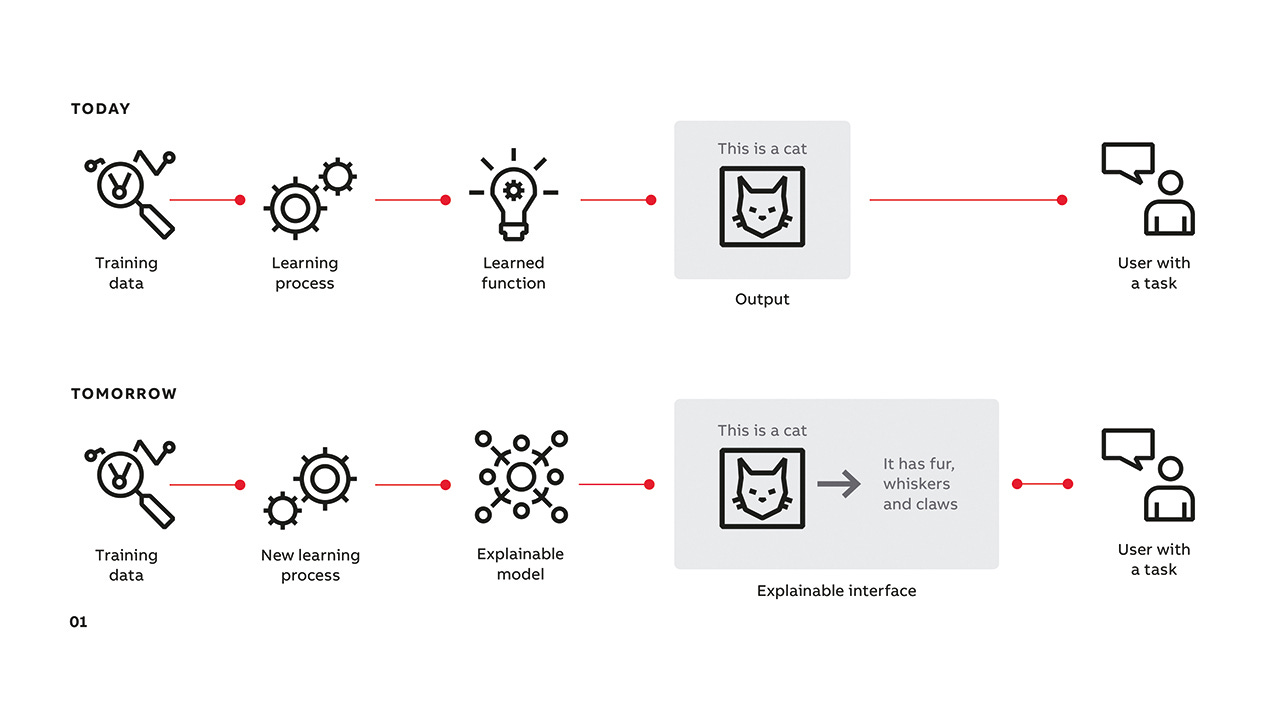

Unlike black-box AI models, which often function as opaque decision-making systems, explainable AI aims to provide information about the model’s reasoning and decision-making process. The image above represents the typical structure of an explainable AI system.

Here are some points to consider:

Accountability: As AI becomes integrated into critical decision-making processes, understanding how these decisions are made becomes crucial. XAI fosters accountability by enabling us to trace responsibility for outcomes back to the data and algorithms involved.

Societal Impact: AI algorithms can perpetuate societal biases if not carefully monitored. XAI helps us identify and mitigate these biases, promoting fairer AI systems.

Model Improvement: By understanding how features contribute to predictions, XAI allows data scientists to refine models for better performance and accuracy.

Principles of Explainable AI

NIST has developed four key principles of explainable AI. NIST's own name for each principle is given in parentheses.

Transparency (Explanation): An AI system should deliver evidence or reasons for its outputs, so that people can understand how it arrived at them.

User-Centric Design (Meaningful): Explanations should be understandable to their intended audience. Not everyone needs the same level of technical detail.

Accuracy of Explanation (Explanation Accuracy): The reasons given for an AI's decision should be truthful and correctly reflect the process that produced the output.

Understanding Limitations (Knowledge Limits): An AI system should operate only under the conditions it was designed for, or when it has sufficient confidence in its output, and should be deployed with a clear understanding of its capabilities and limitations.

Overall, these principles aim to build trust in AI systems by making their decision-making processes more transparent and easier to understand. This is crucial for ensuring responsible and ethical use of AI technology.

Explainable AI (XAI) process in 5 steps

Explainable AI (XAI) isn't a magic way to peek inside a black box; it is a method for building trust and understanding in AI systems. It is a multi-step process that works like this:

Define Goals and Needs: Start by understanding why you need explainability. Is it for regulatory compliance, debugging, or building trust with users? The answer will guide the type of explanations you need and the techniques you use.

Choose the Right XAI Technique: Different XAI methods exist, each with its own strengths. For instance, SHAP attributes a specific prediction to the individual features (and these attributions can be aggregated into global feature importance), while LIME explains a single instance's outcome by fitting a simple surrogate model around it. Consider the model type, data, and target audience when selecting a technique.

Train and Evaluate the Model: Build your AI model while keeping explainability in mind. There might be trade-offs between accuracy and interpretability. Evaluate both the model's performance and its ability to be explained.

Generate Explanations: Use the chosen XAI technique to extract explanations from the trained model. These explanations could be feature importances, decision rules, or counterfactual examples (showing the smallest change to the input data that would change the outcome).

Communicate Insights: Present the explanations in a way that stakeholders can understand. This might involve visualizations, text summaries, or interactive tools depending on the audience's technical background.

XAI is an ongoing process. As the model is used in real-world scenarios, you may need to refine explanations and update the model based on new data or feedback.
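The counterfactual examples mentioned in the Generate Explanations step can be illustrated with a minimal sketch. It assumes a hypothetical loan-approval model: a hand-written linear score with made-up feature names, weights, and threshold. The search raises a single feature in fixed steps until the decision flips, which is the simplest possible counterfactual search; practical tools typically search over several features at once and add plausibility constraints.

```python
# Hypothetical loan model: feature names, weights, and threshold are made up.
WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
THRESHOLD = 20.0


def score(applicant):
    """Linear score: sum of weight * feature value."""
    return sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def approve(applicant):
    return score(applicant) >= THRESHOLD


def find_counterfactual(applicant, feature, step, max_steps=1000):
    """Change one feature in fixed steps until the decision flips.

    Returns a modified copy of the applicant, or None if no flip is found.
    """
    original = approve(applicant)
    candidate = dict(applicant)  # never modify the caller's data
    for _ in range(max_steps):
        candidate[feature] += step
        if approve(candidate) != original:
            return candidate
    return None


applicant = {"income": 40.0, "debt": 10.0, "years_employed": 2.0}
print(approve(applicant))  # False: the score (about 12.6) is below 20
counterfactual = find_counterfactual(applicant, "income", step=1.0)
print(counterfactual)  # {'income': 55.0, 'debt': 10.0, 'years_employed': 2.0}
```

Read as an explanation: "the application would have been approved if income had been 55 instead of 40, all else being equal."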

Explainability vs. Interpretability in AI

Interpretability: “level of understanding how the underlying AI”

Explainability: “level of understanding how the AI-based system ... came up with a given result”

Explainability and interpretability are often used as synonyms when discussing artificial intelligence in everyday speech, but technically, explainable AI models and interpretable AI models are quite different.

An interpretable AI model makes decisions that can be understood by a human without requiring additional information. Given enough time and data, a human being would be able to replicate the steps that interpretable AI takes to arrive at a decision.
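A shallow decision tree is a typical interpretable model. The minimal sketch below is a hypothetical, hand-written tree for loan decisions; the feature names and thresholds are invented for illustration. Because it returns the rules that fired along with the decision, a person can retrace and recompute every step.

```python
def decide(applicant):
    """Hypothetical hand-written decision tree for a loan application.

    Returns the decision plus the list of rules that fired, so a person can
    retrace (and recompute) every step.
    """
    path = []
    if applicant["income"] > 50:
        path.append("income > 50")
        if applicant["debt_ratio"] < 0.4:
            path.append("debt_ratio < 0.4")
            return "approve", path
        path.append("debt_ratio >= 0.4")
        return "manual review", path
    path.append("income <= 50")
    if applicant["has_guarantor"]:
        path.append("has guarantor")
        return "manual review", path
    path.append("no guarantor")
    return "reject", path


decision, path = decide({"income": 72, "debt_ratio": 0.25, "has_guarantor": False})
print(decision, path)  # approve ['income > 50', 'debt_ratio < 0.4']
```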

In contrast, an explainable model is so complicated that a human being wouldn’t be able to understand how the model makes a prediction without being given an analogy or some other human-understandable explanation for the model’s decisions. Theoretically, even if given an infinite amount of time and data, a human being would not be able to replicate the steps that explainable AI takes to arrive at a decision.

Explainability Methods

XAI methods help humans understand how a machine learning or deep learning algorithm produces its output. They help quantify a model's correctness, fairness, and transparency, and they support AI-assisted decision-making.

Model-Agnostic: These methods don't require knowledge of the specific model's internals. Examples include:

LIME (Local Interpretable Model-agnostic Explanations): Explains an individual prediction by fitting a simple, interpretable surrogate model (such as a sparse linear model) that approximates the black-box model in the local vicinity of the data point of interest.
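The LIME idea can be sketched in plain Python under simplifying assumptions: a made-up two-feature function `black_box` stands in for the opaque model, perturbations are Gaussian noise around the point of interest, proximity is measured with an exponential kernel, and the surrogate is a weighted linear fit. This skips the interpretable data representation and feature selection that the real LIME library uses; the surrogate's slopes serve as the local explanation.

```python
import math
import random


def black_box(x1, x2):
    # Stand-in for an opaque model; nonlinear in x1.
    return x1 ** 2 + 3 * x2


def lime_explain(f, x0, n_samples=2000, sigma=0.5, width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate to f around x0 = (x1, x2).

    Returns the surrogate's slopes (w1, w2), used as local feature effects.
    """
    rng = random.Random(seed)
    zs, ys, ws = [], [], []
    for _ in range(n_samples):
        # Perturb the point of interest and query the black box.
        z = (x0[0] + rng.gauss(0, sigma), x0[1] + rng.gauss(0, sigma))
        dist2 = (z[0] - x0[0]) ** 2 + (z[1] - x0[1]) ** 2
        zs.append(z)
        ys.append(f(*z))
        ws.append(math.exp(-dist2 / width ** 2))  # closer samples weigh more
    total = sum(ws)
    # Weighted means; centering the data removes the intercept from the system.
    m1 = sum(w * z[0] for w, z in zip(ws, zs)) / total
    m2 = sum(w * z[1] for w, z in zip(ws, zs)) / total
    my = sum(w * y for w, y in zip(ws, ys)) / total
    s11 = s12 = s22 = s1y = s2y = 0.0
    for w, z, y in zip(ws, zs, ys):
        a, b, c = z[0] - m1, z[1] - m2, y - my
        s11 += w * a * a
        s12 += w * a * b
        s22 += w * b * b
        s1y += w * a * c
        s2y += w * b * c
    # Solve the 2x2 weighted least-squares normal equations (Cramer's rule).
    det = s11 * s22 - s12 * s12
    return (s1y * s22 - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


w1, w2 = lime_explain(black_box, (1.0, 2.0))
print(round(w1, 2), round(w2, 2))  # close to the local gradient (2, 3)
```

At the point (1, 2) the true local gradient of `black_box` is (2, 3), so the surrogate's slopes should land near those values even though the model itself is not linear.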

Anchors: Explains predictions by finding if-then decision rules ("anchors") that define regions in the feature space where the prediction stays the same with high probability.
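A minimal sketch of the Anchors idea, assuming a hypothetical rule-based `model` over three categorical features and a uniform background distribution. A candidate anchor fixes some features to the instance's values; its precision is estimated by resampling the remaining features; the search returns the smallest rule whose precision reaches a threshold. The published method uses a bandit-based sampling strategy and beam search and also weighs coverage, so this exhaustive search is only a simplification.

```python
import itertools
import random

# Assumed feature space; resampled features are drawn uniformly from these values.
FEATURES = {
    "income": ["high", "low"],
    "debt": ["low", "high"],
    "employed": [True, False],
}


def model(x):
    # Hypothetical black box: approve high earners with low debt or a job.
    return x["income"] == "high" and (x["debt"] == "low" or x["employed"])


def precision(rule, instance, n_samples=1000, seed=0):
    """Estimate how often the prediction is unchanged when the features in
    `rule` are fixed to the instance's values and the rest are resampled."""
    rng = random.Random(seed)
    target = model(instance)
    hits = 0
    for _ in range(n_samples):
        sample = {name: instance[name] if name in rule else rng.choice(values)
                  for name, values in FEATURES.items()}
        hits += model(sample) == target
    return hits / n_samples


def find_anchor(instance, threshold=0.95):
    """Return the smallest rule (fixed feature values) reaching the threshold."""
    names = list(FEATURES)
    for size in range(len(names) + 1):
        for rule in itertools.combinations(names, size):
            if precision(rule, instance) >= threshold:
                return {name: instance[name] for name in rule}
    return None


x = {"income": "high", "debt": "low", "employed": True}
print(find_anchor(x))  # {'income': 'high', 'debt': 'low'}
```

Fixing income and employment would also qualify here; this simple search just returns the first rule of the smallest size that it finds.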

Model-Specific: These methods leverage knowledge of the model's architecture. Examples include:

LRP (Layer-wise Relevance Propagation): Propagates the prediction backward through the network, layer by layer, redistributing it as relevance among the neurons of each layer and ultimately among the input features.
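A minimal sketch of the epsilon rule, one common LRP propagation rule, on a tiny hand-specified ReLU network whose weights are made up for illustration. Relevance starts as the output value and is passed backward in proportion to each neuron's contribution to the layer above. Because this network has no biases, the input relevances sum (up to the small stabilizer `eps`) to the output, which illustrates LRP's conservation property.

```python
def relu(v):
    return max(0.0, v)


# Tiny hypothetical network: 3 inputs -> 2 ReLU hidden units -> 1 output, no biases.
W1 = [[0.5, 0.25],  # W1[i][j]: weight from input i to hidden unit j
      [0.25, 0.5],
      [0.5, 1.0]]
W2 = [2.0, 1.0]     # W2[j]: weight from hidden unit j to the output


def lrp_epsilon(x, eps=1e-6):
    """Return the network output and one relevance score per input feature."""
    n_in, n_hidden = len(x), len(W2)
    # Forward pass, keeping pre-activations z and activations a.
    z = [sum(x[i] * W1[i][j] for i in range(n_in)) for j in range(n_hidden)]
    a = [relu(zj) for zj in z]
    y = sum(a[j] * W2[j] for j in range(n_hidden))

    def stabilize(v):
        # Epsilon stabilizer: pushes a denominator away from zero.
        return v + eps if v >= 0 else v - eps

    # Output -> hidden: split y in proportion to each contribution a_j * w_j.
    r_hidden = [a[j] * W2[j] / stabilize(y) * y for j in range(n_hidden)]
    # Hidden -> input: split each active unit's relevance by x_i * w_ij / z_j.
    r_input = [sum(x[i] * W1[i][j] / stabilize(z[j]) * r_hidden[j]
                   for j in range(n_hidden) if a[j] > 0)
               for i in range(n_in)]
    return y, r_input


y, relevance = lrp_epsilon([2.0, 1.0, 1.0])
print(y, [round(r, 3) for r in relevance])  # 5.5 [2.5, 1.0, 2.0]
```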

Deep Taylor Decomposition (DTD): Provides a theoretical foundation for LRP, explaining relevance through Taylor expansion.
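In outline, DTD explains a function f (for example, a neuron's output or the network's output) with a first-order Taylor expansion around a root point x̃ chosen so that f(x̃) = 0. The first-order terms then serve as the relevance scores R_i, and ε collects the higher-order terms. The notation below is chosen for illustration.

```latex
\begin{aligned}
f(x) &= f(\tilde{x}) + \sum_i \left.\frac{\partial f}{\partial x_i}\right|_{x=\tilde{x}} (x_i - \tilde{x}_i) + \varepsilon
      = \sum_i R_i(x) + \varepsilon, \qquad \text{since } f(\tilde{x}) = 0,\\
R_i(x) &= \left.\frac{\partial f}{\partial x_i}\right|_{x=\tilde{x}} (x_i - \tilde{x}_i).
\end{aligned}
```

Applying this expansion to one neuron at a time, with suitable choices of root point, is how DTD justifies LRP's layer-wise propagation rules.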

Other Methods:

GraphLIME: Applies LIME's concepts to explain predictions made by graph neural networks (GNNs) on graph data.

Prediction Difference Analysis (PDA): Estimates the relevance of a feature by measuring how the prediction changes when the feature is missing.
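A minimal sketch of prediction difference analysis, assuming a hypothetical logistic `model` and a small made-up `BACKGROUND` dataset. A feature is made "missing" by replacing it with the values it takes in the background data and averaging the resulting predictions; its relevance is how far the original prediction lies from that average. This sketch uses the plain difference in predicted probability; some published variants express the difference in log-odds (weight of evidence) instead.

```python
import math


def model(x):
    # Hypothetical black box: logistic model returning P(class = 1).
    # Feature x[2] has weight 0, so it cannot influence the prediction.
    z = 1.5 * x[0] - 2.0 * x[1] + 0.0 * x[2]
    return 1.0 / (1.0 + math.exp(-z))


# Small made-up background dataset used to stand in for a "missing" feature.
BACKGROUND = [[0.0, 0.0, 1.0], [1.0, 0.5, -1.0], [-1.0, 1.0, 0.0], [0.5, -0.5, 2.0]]


def prediction_difference(x):
    """Relevance of feature i: f(x) minus the average prediction when x[i]
    is replaced by the values that feature takes in BACKGROUND."""
    p = model(x)
    relevances = []
    for i in range(len(x)):
        preds = []
        for row in BACKGROUND:
            x_missing = list(x)
            x_missing[i] = row[i]  # simulate "feature i is unknown"
            preds.append(model(x_missing))
        relevances.append(p - sum(preds) / len(preds))
    return relevances


relevance = prediction_difference([1.0, -1.0, 3.0])
print([round(r, 3) for r in relevance])
```

Both informative features get positive relevance here, because their values push the prediction up relative to the background, while the unused feature `x[2]` gets zero relevance.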

TCAV (Testing with Concept Activation Vectors): Analyzes how strongly a specific concept influences the classification made by a convolutional neural network.
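A minimal sketch of the TCAV computation on made-up 2-D layer activations. A logistic regression separates concept activations from random ones; its normalized weight vector serves as the concept activation vector (CAV); the TCAV score is the fraction of class inputs whose class logit has a positive directional derivative along the CAV. The activations, the class logit h(a) = a1 - (a2 - 1)^2, and its hand-written gradient are assumptions for illustration. In a real network, the activations come from a chosen layer and the gradient comes from automatic differentiation.

```python
import math

# Made-up 2-D activations at some layer of a hypothetical network.
CONCEPT = [(0.1, 2.0), (-0.2, 1.8), (0.3, 2.2), (0.0, 2.5)]    # concept examples
RANDOM = [(0.2, -0.1), (-0.3, 0.2), (0.1, 0.3), (-0.1, -0.4)]  # random counterexamples


def train_cav(pos, neg, lr=0.1, epochs=500):
    """Fit a logistic regression (concept = 1, random = 0) by gradient descent.

    The CAV is the unit normal of its decision boundary, pointing toward the concept.
    """
    w, b = [0.0, 0.0], 0.0
    data = [(a, 1.0) for a in pos] + [(a, 0.0) for a in neg]
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for a, label in data:
            p = 1.0 / (1.0 + math.exp(-(w[0] * a[0] + w[1] * a[1] + b)))
            gw[0] += (p - label) * a[0]
            gw[1] += (p - label) * a[1]
            gb += p - label
        w = [w[0] - lr * gw[0], w[1] - lr * gw[1]]
        b -= lr * gb
    norm = math.hypot(w[0], w[1])
    return (w[0] / norm, w[1] / norm)


def logit_grad(a):
    # Hand-written gradient of the hypothetical class logit h(a) = a1 - (a2 - 1)**2.
    return (1.0, -2.0 * (a[1] - 1.0))


def tcav_score(class_activations, cav):
    """Fraction of class examples whose logit increases along the CAV direction."""
    positive = 0
    for a in class_activations:
        g = logit_grad(a)
        if g[0] * cav[0] + g[1] * cav[1] > 0:  # directional derivative
            positive += 1
    return positive / len(class_activations)


cav = train_cav(CONCEPT, RANDOM)
score = tcav_score([(0.0, -1.0), (0.0, 0.0), (0.0, 0.5), (0.0, 3.0)], cav)
print(score)  # 0.75
```

Here the concept raises the class logit for three of the four inputs, so the TCAV score is 0.75.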

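XGNN (Explainable Graph Neural Networks): Provides model-level, post-hoc explanations for graph classification models by training a graph generator with reinforcement learning (RL) to produce graph patterns that maximize the prediction for a target class.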

SHAP (SHapley Additive exPlanations): Explains the contribution of individual features to a model's prediction for a specific data point, based on Shapley values from cooperative game theory.

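SHAP in detail: SHAP decomposes the difference between a model's prediction for a particular data point and a baseline value (often the model's expected output) and assigns a portion of this difference to each feature. It satisfies desirable properties: the attributions add up exactly to that difference, interaction effects are shared fairly among the features involved, and features with no influence on the prediction receive zero attribution. However, computing exact SHAP values requires evaluating the model over all subsets of features, a number that grows exponentially with the number of features, so practical implementations rely on sampling-based approximations or model-specific algorithms.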
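The Shapley value of feature i averages its marginal contribution over every coalition S of the other features: phi_i = sum over S ⊆ F \ {i} of |S|! (|F| - |S| - 1)! / |F|! * [f(S ∪ {i}) - f(S)]. The minimal sketch below computes this exactly by enumeration. It assumes a hypothetical `model` and a single baseline vector for "absent" features, whereas SHAP implementations usually average over a background dataset.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical model: an additive term, an interaction, and an unused x[3].
    return 2.0 * x[0] + x[1] * x[2]


def shapley_values(f, x, baseline):
    """Exact Shapley values by enumerating every coalition of features.

    Features outside a coalition are set to their baseline value.
    """
    n = len(x)

    def value(coalition):
        return f([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = set(coalition)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


x, baseline = [1.0, 2.0, 3.0, 5.0], [0.0, 0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print([round(p, 6) for p in phi])  # [2.0, 3.0, 3.0, 0.0]
print(round(sum(phi), 6), model(x) - model(baseline))  # 8.0 8.0
```

The output shows the three properties at once: the interaction x[1] * x[2] = 6 is split evenly between its two features, the unused feature gets zero, and the attributions sum to the prediction minus the baseline prediction. Enumerating every coalition is also what makes exact SHAP values expensive, since the number of subsets doubles with each added feature.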